Generative AI and the Bullshit Singularity

I haven’t forgotten about my promise to discuss the concept of facadeware. The slings and arrows of outrageous fortune continue their assault on me as I navigate, among other things, two relocations in two months. I want to write the series, and I plan to write the series, but I’ve been busy. Nonetheless, thanks to those who read the first installment and the smattering of donations that were eventually refunded.

Anyway, as I thought about how to continue that series, I realized that I’d have to talk about generative AI. In the year of our Lord 2025, if I were to avoid talking about GenAI for as long as 15 minutes, I’m pretty sure some kind of Harrison-Bergeron-universe agent would break into my house and electrocute me.

Generative AI Isn’t Facadeware

First, let me say that what I’m describing as facadeware predates generative AI’s explosion into the mainstream in 2022. I also don’t think GenAI is an example of facadeware. At least, not exactly.

In the previous post, I briefly defined facadeware as “superficially advanced gadgetry that actually has a net negative value proposition.” And while GenAI clearly has a (to date and for the foreseeable future) net-negative value proposition, I wouldn’t categorize it as superficially advanced. It is genuinely advanced, and it is an impressive feat of experimentation and human ingenuity.

And so because of this, GenAI/LLM techs don’t really have a place in the facadeware series (though I think the concept of “agentic AI” does qualify). However, I want to dump my bucket on this subject both because I know people will invariably bring it up for discussion and because I think a relationship, if not direct, does exist.

The Role of Bullshit in GenAI and Facadeware

Bullshit, as a concept, plays a foundational role in both GenAI and facadeware, but a different one in each. The role of bullshit in facadeware is relatively simple. To sell anything with a net-negative value proposition, almost by definition, requires bullshit. Bullshit is the fuel of the facadeware engine.

GenAI kind of inverts this. With GenAI, the fuel of the engine is human ingenuity, and the output is bullshit. In other words, some of the best and brightest minds in all of enterprise Silicon Valley have produced a technological advancement that is to bullshit what cold fusion would be to energy output. It was an improbable and unexpected giant leap forward in humanity’s collective capacity to generate bullshit.

So if you were to look for a relationship between facadeware and GenAI, the most likely scenario is that you would either use GenAI to generate facadeware or simply to market it.

Defining Bullshit Somewhat Rigorously

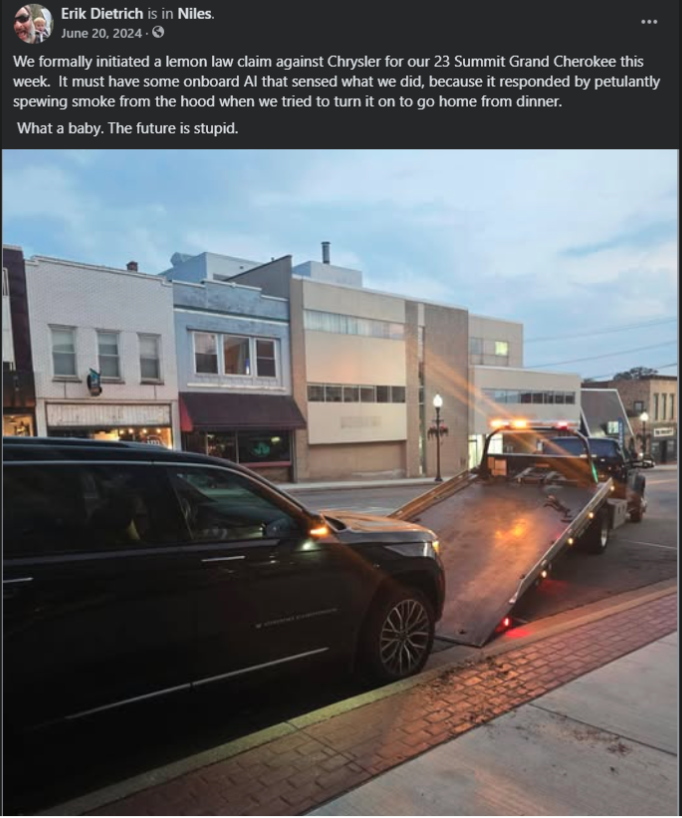

Now, before I go and make you think this is a simple exercise in Luddite shitposting, let me be clear that I actually have nothing against bullshit in moderation. Anything you do on social media is more or less bullshit, and plenty of self-soothing and self-indulgent narratives, like schadenfreude fantasies, are bullshit.

Now, let’s actually define bullshit with some precision before I lumber onwards with this rant. The dictionary in Google gives us a short, sweet take:
